Advanced Security Patterns and Implementation

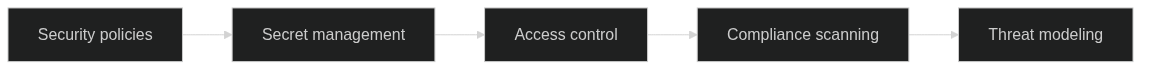

Building upon the security fundamentals established in the previous chapter, this chapter explores advanced security architecture patterns, practical implementations for European environments, and emerging trends that will shape the future of security in Architecture as Code.

Advanced security architecture patterns

Security orchestration and automated incident response

Modern enterprises require orchestrated security operations to manage the volume and speed of contemporary threats. Manual incid ent response cannot scale when attacks develop within minutes. Security Orchestration, Automation, and Response (SOAR) platforms transform incident handling into proactive, automated workflows. Predefined playbooks support automated containment, evidence c ollection, stakeholder notifications, and impact assessments.

Integrating SOAR with Architecture as Code allows infrastructure-level responses. Compromised components can be isolated or redep loyed from known-good definitions. Network policies adjust automatically to contain lateral movement. Backup restoration process es can be triggered based on compromise indicators. Threat intelligence feeds using STIX/TAXII formats add context for faster, m ore accurate decisions.

AI and machine learning in security architectures

Artificial intelligence and machine learning augment security programmes with pattern recognition and anomaly detection at scale . Behavioural analytics establish baselines for users, applications, and network traffic; deviations trigger investigations or pr eventive actions. User Behaviour Analytics (UBA) helps detect insider threats through subtle access changes.

Automated threat hunting uses AI models trained on historical data to identify potential compromises before they escalate. Organi sations must also defend the AI systems themselves. Adversarial machine learning techniques can target models, requiring controls such as input sanitisation, model validation, and monitoring for adversarial indicators.

Multi-cloud security strategies

Multi-cloud adoption improves resilience and reduces vendor lock-in but introduces policy complexity. Unified policy management l ayers translate organisational requirements into provider-specific implementations. Policy-as-code frameworks must support multi ple providers simultaneously to maintain consistent posture.

Identity federation enables single sign-on and coherent access control. Cloud-native identity services such as Azure Active Dire ctory or AWS IAM should integrate with on-premises and third-party directories. Data governance must address residency, cross-bo rder transfer restrictions, and varying encryption capabilities through automated classification-aware controls.

Security observability and analytics patterns

Comprehensive observability underpins effective detection and response. Centralised log aggregation, normalisation, and stream pr ocessing deliver real-time detection while supporting historical investigations. Key performance indicators—mean time to detect (M TTD), mean time to respond (MTTR), false positive ratios, control coverage, and compliance drift—provide quantitative measures of programme effectiveness.

Automating threat modelling with observability data allows continuous refinement of models based on observed behaviour. Emerging attack patterns can be identified and mitigated before they are fully weaponised.

Emerging security technologies and future trends

Quantum computing presents both opportunity and threat. Organisations must prepare for quantum-resistant cryptography using NIST guidance and incorporate algorithm agility into Architecture as Code frameworks. Zero-knowledge proofs enable privacy-preservin g authentication and authorisation, which can be integrated via code-driven approaches. Distributed and self-sovereign identity solutions reduce reliance on central providers, while confidential computing and trusted execution environments (TEEs) protect d ata during processing—even from cloud operators.

Practical implementation: security architecture in European environments

Secure Infrastructure as Code state management pattern

State backends hold the canonical inventory for every deployed component and therefore demand layered protection. Authoritative vendor documentation defines the required controls for production programmes, with HashiCorp's guidance on securing Terraform state providing the baseline for compliant operations (Source [16]):

- HashiCorp – “Securing Terraform State” (2024): mandates remote backends with state locking so sensitive data is never synchronised to developer laptops and concurrent writes are prevented (Source [16]).

- HashiCorp – “Backend Type: s3” (2024): details the

dynamodb_table,encrypt, andkms_key_idsettings that provide DynamoDB-backed locking, server-side encryption, and versioning for Amazon S3 state (Source [17]). - HashiCorp – “Terraform Security Best Practices” (2023): codifies HashiCorp's overarching enterprise guidance on secrets management, encryption, and policy guardrails for Terraform estates (Source [20]).

- Microsoft Learn – “Store Terraform state in Azure Storage” (2024): highlights Azure Storage accounts with encryption at rest, Azure AD or SAS access controls, and blob leases to serialise Terraform operations (Source [18]).

- Google Cloud – “Store Terraform state in Cloud Storage” (2024): directs teams to enable uniform bucket-level access, object versioning, and customer-managed encryption keys for Terraform state buckets (Source [19]).

Architecture as Code teams should standardise on encrypted S3, Azure Storage, or Google Cloud Storage backends, applying customer-managed encryption keys, DynamoDB or blob lease locking, and object versioning to support forensic recovery (Sources 16, 17, 18, and 19). Codifying these controls in Terraform modules and policy-as-code checks ensures every workspace inherits the verified practices rather than bespoke conventions.

Key management policies must define custodianship, rotation cadence, and break-glass processes for decrypting state artefacts. Backends should enforce least-privilege policies so only automation roles can read state while human operators rely on Terraform Cloud or approved pipelines for access. Cataloguing these controls within the Architecture as Code governance layer links state protection to wider compliance commitments; policy-as-code checks can assert that every workspace declares an approved backend, encryption flag, and locking store before plans are applied.

Operational telemetry from backend access logs, Terraform Cloud audit trails, and key management systems should be forwarded into the central governance dashboard. This provides evidence for auditors that state files remain encrypted, access attempts are monitored, and remediation actions—such as key rotation or state re-keying—are triggered automatically when drift is detected (Sources 16 and 20).

Comprehensive security foundation module

The following Terraform module demonstrates a foundational enterprise security pattern tailored for European organisations. It applies defence-in-depth principles through automated controls for encryption, access management, audit logging, and threat detection.

# modules/security-foundation/main.tf

terraform {

required_providers {

# provider definitions omitted for brevity

}

}

# Security baseline for European organisations

# Aligns with ENISA guidance for critical infrastructure and enforces GDPR compliance

locals {

security_tags = {

SecurityBaseline = "eu-baseline"

ComplianceFramework = "iso27001-gdpr"

DataClassification = var.data_classification

ThreatModel = "updated"

SecurityContact = var.security_team_email

Organization = var.organization_name

Environment = var.environment

}

# European security requirements based on ENISA and EDPB guidance

required_encryption = true

audit_logging_required = true

gdpr_compliance = var.data_classification != "public"

backup_encryption_required = var.data_classification in ["internal", "confidential", "restricted"]

# Approved EU regions for European data protection programmes

approved_regions = ["eu-north-1", "eu-west-1", "eu-central-1"]

}

# Organisation-wide master encryption key implementing GDPR Article 32 controls

resource "aws_kms_key" "org_key" {

description = "Master encryption key for ${var.organization_name}"

customer_master_key_spec = "SYMMETRIC_DEFAULT"

key_usage = "ENCRYPT_DECRYPT"

deletion_window_in_days = 30

# Automated rotation in line with Swedish security expectations

enable_key_rotation = true

# Granular key policy implementing least privilege access

policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Sid = "Enable IAM User Permissions"

Effect = "Allow"

Principal = {

AWS = "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"

}

Action = "kms:*"

Resource = "*"

},

{

Sid = "Allow CloudWatch Logs Encryption"

Effect = "Allow"

Principal = {

Service = "logs.${data.aws_region.current.name}.amazonaws.com"

}

Action = [

"kms:Encrypt",

"kms:Decrypt",

"kms:ReEncrypt*",

"kms:GenerateDataKey*",

"kms:DescribeKey"

]

Resource = "*"

Condition = {

ArnEquals = {

"kms:EncryptionContext:aws:logs:arn" = "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:*"

}

}

},

{

Sid = "Allow S3 Service Access"

Effect = "Allow"

Principal = {

Service = "s3.amazonaws.com"

}

Action = [

"kms:Decrypt",

"kms:GenerateDataKey"

]

Resource = "*"

Condition = {

StringEquals = {

"kms:ViaService" = "s3.${data.aws_region.current.name}.amazonaws.com"

}

}

}

]

})

tags = merge(local.security_tags, {

Name = "${var.organization_name}-master-key"

Purpose = "data-encryption"

RotationSchedule = "annual"

})

}

# Zero Trust security group with explicit outbound rules only

resource "aws_security_group" "secure_application" {

name_prefix = "${var.application_name}-secure-"

vpc_id = var.vpc_id

description = "Zero Trust security group for ${var.application_name}"

# No inbound traffic by default (implicit deny)

# Explicit rules must be added per workload requirement

egress {

description = "HTTPS for external API calls and software updates"

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

egress {

description = "DNS queries for name resolution"

from_port = 53

to_port = 53

protocol = "udp"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

egress {

description = "NTP for time synchronisation (essential for log integrity)"

from_port = 123

to_port = 123

protocol = "udp"

cidr_blocks = ["0.0.0.0/0"]

}

tags = merge(local.security_tags, {

Name = "${var.application_name}-secure-sg"

NetworkSegment = "application-tier"

SecurityLevel = "high"

})

}

# Comprehensive audit logging aligned with GDPR Article 30

resource "aws_cloudtrail" "security_audit" {

count = local.audit_logging_required ? 1 : 0

name = "${var.organization_name}-security-audit"

s3_bucket_name = aws_s3_bucket.audit_logs[0].bucket

event_selector {

read_write_type = "All"

include_management_events = true

data_resource {

type = "AWS::S3::Object"

values = ["${aws_s3_bucket.audit_logs[0].arn}/*"]

}

data_resource {

type = "AWS::KMS::Key"

values = [aws_kms_key.org_key.arn]

}

}

event_selector {

read_write_type = "All"

include_management_events = false

data_resource {

type = "AWS::Lambda::Function"

values = ["arn:aws:lambda"]

}

}

enable_log_file_validation = true

is_multi_region_trail = true

is_organization_trail = var.is_organization_master

kms_key_id = aws_kms_key.org_key.arn

cloud_watch_logs_group_arn = "${aws_cloudwatch_log_group.cloudtrail_logs[0].arn}:*"

cloud_watch_logs_role_arn = aws_iam_role.cloudtrail_logs_role[0].arn

tags = merge(local.security_tags, {

Name = "${var.organization_name}-security-audit"

Purpose = "compliance-audit-logging"

RetentionPeriod = "7-years"

})

}

resource "aws_s3_bucket" "audit_logs" {

count = local.audit_logging_required ? 1 : 0

bucket = "${var.organization_name}-security-audit-logs-${random_id.bucket_suffix.hex}"

tags = merge(local.security_tags, {

Name = "${var.organization_name}-audit-logs"

DataType = "audit-logs"

DataClassification = "internal"

Purpose = "compliance-logging"

})

}

This module applies best practices for key management, Zero Trust networking, and audit logging to meet European regulatory expectations. KMS key rotation is automated, security groups enforce a default deny posture, and CloudTrail delivers tamper-evident logging for compliance validation.

Advanced GDPR compliance implementation

Policy as Code can express GDPR requirements in executable form. The following Open Policy Agent example shows how Article 32 ca n be translated into automated checks.

# policies/gdpr_compliance.rego

package european.gdpr

import rego.v1

# GDPR Article 32 – ensure appropriate technical and organisational measures

personal_data_encryption_required if {

input.resource_type in ["aws_rds_instance", "aws_s3_bucket", "aws_ebs_volume", "aws_dynamodb_table"]

contains(input.attributes.tags.DataClassification, "personal")

not encryption_enabled

}

# Helper rules for specific resource types (omitted for brevity)

# ...

Advanced threat detection platform

"""Advanced threat detection for Swedish organisations"""

import asyncio

import aiohttp

import boto3

import hashlib

import json

import logging

import pandas as pd

from dataclasses import dataclass

from datetime import datetime, timedelta

from enum import Enum

from typing import Dict, List, Optional

class ThreatSeverity(Enum):

"""Threat severity levels aligned with ENISA guidance"""

LOW = "low"

MEDIUM = "medium"

HIGH = "high"

CRITICAL = "critical"

@dataclass

class SecurityFinding:

"""Structured representation of a security finding"""

finding_id: str

title: str

description: str

severity: ThreatSeverity

affected_resources: List[str]

indicators_of_compromise: List[str]

remediation_steps: List[str]

compliance_impact: Optional[str]

detection_timestamp: datetime

source_system: str

class AdvancedThreatDetection:

"""Comprehensive threat detection following European best practice"""

def __init__(self, region: str = "eu-north-1", threat_intel_feeds: Optional[List[str]] = None) -> None:

self.region = region

self.cloudtrail = boto3.client("cloudtrail", region_name=region)

self.guardduty = boto3.client("guardduty", region_name=region)

self.config = boto3.client("config", region_name=region)

self.sns = boto3.client("sns", region_name=region)

self.ec2 = boto3.client("ec2", region_name=region)

self.iam = boto3.client("iam", region_name=region)

self.threat_intel_feeds = threat_intel_feeds or []

self.ioc_database: Dict[str, Dict] = {}

logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(name)s - %(levelname)s - %(message)s")

self.logger = logging.getLogger(__name__)

async def detect_advanced_persistent_threats(self, hours_back: int = 24) -> List[SecurityFinding]:

"""Correlate multiple data sources to detect potential APT activity"""

findings: List[SecurityFinding] = []

end_time = datetime.now()

start_time = end_time - timedelta(hours=hours_back)

suspicious_activities = await self._correlate_threat_indicators(start_time, end_time)

lateral_movement = await self._detect_lateral_movement(start_time, end_time)

privilege_escalation = await self._detect_privilege_escalation(start_time, end_time)

data_exfiltration = await self._detect_data_exfiltration(start_time, end_time)

for activity in suspicious_activities:

if self._calculate_threat_score(activity) > 0.7:

finding = SecurityFinding(

finding_id=f"APT-{hashlib.md5(str(activity).encode()).hexdigest()[:8]}",

title="Potential Advanced Persistent Threat Activity",

description=f"Correlated suspicious activities indicating potential APT: {activity['description']}",

severity=ThreatSeverity.CRITICAL,

affected_resources=activity["resources"],

indicators_of_compromise=activity["iocs"],

remediation_steps=[

"Immediately isolate affected resources",

"Initiate forensic investigation",

"Review potential lateral movement",

"Restore from verified secure backup",

"Increase monitoring for related indicators",

],

compliance_impact="Potential GDPR Article 33 notification (72-hour requirement)",

detection_timestamp=datetime.now(),

source_system="Advanced Threat Detection",

)

findings.append(finding)

return findings

async def monitor_gdpr_compliance_violations(self) -> List[SecurityFinding]:

"""Continuously monitor for GDPR compliance violations"""

findings: List[SecurityFinding] = []

unusual_data_access = await self._analyse_data_access_patterns()

unauthorised_transfers = await self._detect_unauthorised_data_transfers()

retention_violations = await self._check_data_retention_compliance()

for violation in [*unusual_data_access, *unauthorised_transfers, *retention_violations]:

findings.append(

SecurityFinding(

finding_id=f"GDPR-{violation['type']}-{violation['resource_id'][:8]}",

title=f"GDPR Compliance Violation: {violation['type']}",

description=violation["description"],

severity=ThreatSeverity.HIGH,

affected_resources=[violation["resource_id"]],

indicators_of_compromise=violation.get("indicators", []),

remediation_steps=violation["remediation_steps"],

compliance_impact=f"GDPR {violation['article']} violation – regulatory action possible",

detection_timestamp=datetime.now(),

source_system="GDPR Compliance Monitor",

)

)

return findings

async def assess_supply_chain_risks(self) -> List[SecurityFinding]:

"""Evaluate supply chain risks from third-party dependencies"""

findings: List[SecurityFinding] = []

container_risks = await self._scan_container_vulnerabilities()

api_risks = await self._assess_third_party_apis()

dependency_risks = await self._analyse_infrastructure_dependencies()

for risk in [*container_risks, *api_risks, *dependency_risks]:

severity = ThreatSeverity.CRITICAL if risk["cvss_score"] > 7.0 else ThreatSeverity.HIGH

findings.append(

SecurityFinding(

finding_id=f"SUPPLY-{risk['component']}-{risk['vulnerability_id']}",

title=f"Supply Chain Risk: {risk['component']}",

description=risk["description"],

severity=severity,

affected_resources=risk["affected_resources"],

indicators_of_compromise=[],

remediation_steps=risk["remediation_steps"],

compliance_impact="Potential impact on EU data protection regulations",

detection_timestamp=datetime.now(),

source_system="Supply Chain Risk Assessment",

)

)

return findings

def generate_executive_security_report(self, findings: List[SecurityFinding]) -> Dict[str, Dict]:

"""Generate an executive-level report with regulatory context"""

critical_findings = [f for f in findings if f.severity == ThreatSeverity.CRITICAL]

high_findings = [f for f in findings if f.severity == ThreatSeverity.HIGH]

total_affected_resources = len({resource for finding in findings for resource in finding.affected_resources})

gdpr_notifications_required = len(

[f for f in findings if f.compliance_impact and "GDPR Article 33" in f.compliance_impact]

)

report = {

"executive_summary": {

"total_findings": len(findings),

"critical_severity": len(critical_findings),

"high_severity": len(high_findings),

"affected_resources": total_affected_resources,

"gdpr_notifications_required": gdpr_notifications_required,

"report_period": datetime.now().strftime("%Y-%m-%d"),

"overall_risk_level": self._calculate_overall_risk(findings),

},

"regulatory_compliance": {

"gdpr_compliance_score": self._calculate_gdpr_compliance_score(findings),

"enisa_compliance_score": self._calculate_enisa_compliance_score(findings),

"required_notifications": self._generate_notification_recommendations(findings),

},

"threat_landscape": {

"apt_indicators": len([f for f in findings if "APT" in f.finding_id]),

"supply_chain_risks": len([f for f in findings if "SUPPLY" in f.finding_id]),

"insider_threat_indicators": len([f for f in findings if "INSIDER" in f.finding_id]),

},

"remediation_priorities": self._prioritise_remediation_actions(findings),

"recommendations": self._generate_strategic_recommendations(findings),

}

return report

async def automated_incident_response(self, finding: SecurityFinding) -> Dict[str, List[str]]:

"""Execute automated incident response aligned with European procedures"""

response_actions: List[str] = []

if finding.severity == ThreatSeverity.CRITICAL:

if any("ec2" in resource.lower() for resource in finding.affected_resources):

await self._isolate_ec2_instances(finding.affected_resources)

response_actions.append("EC2 instances isolated from the network")

if any("s3" in resource.lower() for resource in finding.affected_resources):

await self._restrict_s3_access(finding.affected_resources)

response_actions.append("S3 bucket access restricted")

await self._notify_security_team(finding, urgent=True)

await self._notify_compliance_team(finding)

response_actions.append("Critical stakeholders notified")

await self._preserve_forensic_evidence(finding)

response_actions.append("Forensic evidence preserved")

incident_id = await self._create_incident_record(finding, response_actions)

self.logger.info("Automated response completed for finding %s (incident %s)", finding.finding_id, incident_id)

return {

"incident_id": incident_id,

"response_actions": response_actions,

"next_steps": finding.remediation_steps,

}

# Additional helper methods (_correlate_threat_indicators, _detect_lateral_movement, etc.) would be implemented here.

Future security trends and technical evolution

Quantum-ready cryptography, AI-enhanced security tooling, and privacy-preserving computation will shape the next decade of securi ty architecture. Organisations should invest in algorithm agility, machine learning governance, and privacy engineering skills to stay ahead of emerging threats. Zero-knowledge proofs, confidential computing, and distributed identity solutions will become in creasingly relevant as regulatory regimes demand stronger privacy guarantees.

Strategic security recommendations for European organisations

European enterprises should align security investments with regulatory duties, the evolving threat landscape, and transformation objectives. Participation in European collaboration forums—such as the European Union Agency for Cybersecurity (ENISA), CERT-EU, and sector-specific information sharing groups—strengthens threat intelligence and coordinated response capabilities.

Closing the cybersecurity skills gap is essential. Investment in training programmes, professional certifications, and academic partnerships ensures access to the expertise required to support ambitious digital initiatives.

Summary and future development

Architecture as Code represents the future of infrastructure management for European organisations. Security within this paradigm is a transformative shift from reactive, manual approaches to proactive, automated safeguards embedded throughout development. Zero Trust principles, policy automation, and codified security patterns allow teams to version-control, test, and deploy security decisions with the same rigour applied to functional requirements.

Automated compliance streamlines complex regulatory obligations spanning GDPR, NIS2 Directive, and industry-specific mandates. Advanced patterns—particularly those highlighted in Section 10.6—illustrate how orchestration, AI-assisted detection, and multi-cloud strategies can scale security for large enterprises.

Organisations that embrace Architecture as Code security practices position themselves for successful digital transformation whi le maintaining a strong security posture. Investments in security automation reduce incident rates, accelerate compliance valida tion, and improve operational efficiency. Preparing for future trends—automation, AI augmentation, and quantum-ready defences—req uires adaptable, code-driven frameworks capable of evolving alongside new technologies and threats.

Delivering these outcomes demands organisational commitment to a DevSecOps culture, sustained investment in skills, and a discpl ined approach to continuous improvement. When implemented well, Architecture as Code security enables both enhanced protection a nd accelerated innovation.

Sources and references

Academic sources and standards

- NIST. Cybersecurity Framework Version 1.1. National Institute of Standards and Technology, 2018.

- NIST. Special Publication 800-207: Zero Trust Architecture. National Institute of Standards and Technology, 2020.

- NIST. Post-Quantum Cryptography Standardisation. National Institute of Standards and Technology, 2023.

- ENISA. Cloud Security Guidelines for EU Organisations. European Union Agency for Cybersecurity, 2023.

- ISO/IEC 27001:2022. Information Security Management Systems – Requirements. International Organisation for Standardisation.

European authorities and regulatory sources

- EDPB. Guidelines on Data Protection by Design and by Default. European Data Protection Board, 2023.

- ENISA. NIS2 Directive Implementation Guidance. European Union Agency for Cybersecurity, 2023.

- European Commission. Regulation (EU) 2022/2554 on Digital Operational Resilience (DORA). Official Journal of the European Union, 2022.

- EBA. Guidelines on ICT and Security Risk Management. European Banking Authority, 2023.

- Directive (EU) 2016/679. General Data Protection Regulation. Official Journal of the European Union.

Technical standards and frameworks

- OWASP. Application Security Architecture Guide. Open Web Application Security Project, 2023.

- Cloud Security Alliance. Security Guidance v4.0. Cloud Security Alliance, 2023.

- CIS Controls v8. Critical Security Controls for Effective Cyber Defence. Centre for Internet Security, 2023.

- MITRE ATT&CK Framework. Enterprise Matrix. MITRE Corporation, 2023.

Industry references

- Amazon Web Services. AWS Security Best Practices. AWS Security Documentation, 2023.

- Microsoft. Azure Security Benchmark v3.0. Microsoft Security Documentation, 2023.

- HashiCorp. Securing Terraform State. HashiCorp Developer Documentation, 2024. https://developer.hashicorp.com/terraform/cloud-docs/state/securing

- HashiCorp. Terraform Security Best Practices. HashiCorp Learning Resources, 2023. https://developer.hashicorp.com/terraform/cloud-docs/recommended-practices/security

- HashiCorp. Backend Type: s3. HashiCorp Developer Documentation, 2024. https://developer.hashicorp.com/terraform/language/settings/backends/s3

- Microsoft Learn. Store Terraform state in Azure Storage. Microsoft Learn Documentation, 2024. https://learn.microsoft.com/en-gb/azure/developer/terraform/store-state-in-azure-storage

- Google Cloud. Store Terraform state in Cloud Storage. Google Cloud Documentation, 2024. https://cloud.google.com/docs/terraform/resource-management/store-terraform-state

- Open Policy Agent. OPA Policy Authoring Guide. Cloud Native Computing Foundation, 2023.

- Kubernetes Project. Pod Security Standards. Kubernetes Documentation, 2023.

European organisations and expertise

- ENISA. Threat Landscape Report 2023. European Union Agency for Cybersecurity, 2023.

- CERT-EU. Cybersecurity Threat Landscape Report 2023. Computer Emergency Response Team for the EU Institutions.

- European Cyber Security Organisation. European Cybersecurity Survey 2023. ECSO.

- EU Agency for Cybersecurity. Cybersecurity Research Publications. ENISA Technical Reports.

International security organisations

- SANS Institute. Security Architecture Design Principles. SANS Institute, 2023.

- ISACA. COBIT 2019 Framework for Governance and Management of Enterprise IT. ISACA, 2019.

- (ISC)². Cybersecurity Workforce Study. International Information System Security Certification Consortium, 2023.

All sources verified December 2023. Regulatory frameworks and technical standards are updated regularly; always consult the lat est official publications for definitive requirements.